Introduction

AI regulation and compliance are similar to what airbags were for cars in 1970: new at the time and not required. Today, airbags are mandatory and an obvious safety requirement. But if you had said that to people in the 1970s, they'd probably have thought you were crazy.

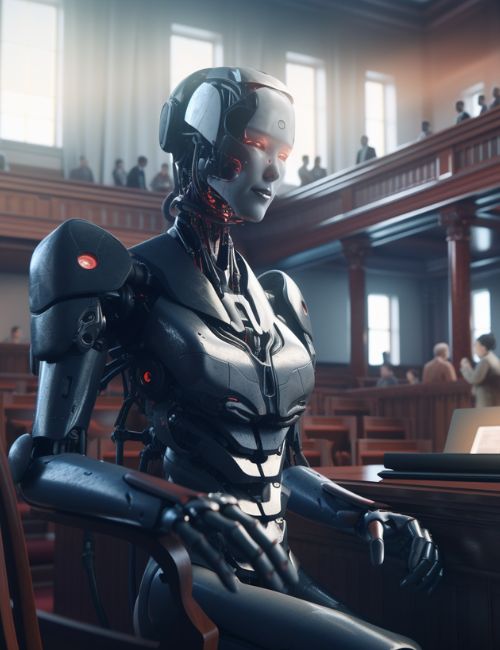

Today, AI personhood is comparable to what airbags were in 1970. It's no surprise that we're marching into an age where AI is becoming commonplace in our daily lives, just as cars did. With this change, it's essential that we address the legal issues AI currently raises.

One such issue is the concept of granting AI personhood, a legal status that recognizes AI as having specific rights and obligations. In this article, we will delve into the benefits of legal personhood for AI and how it can help solve some of the challenges AI poses.

"Success in creating effective AI, could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So, we cannot know if we will be infinitely helped by AI, or ignored by it and side-lined, or conceivably destroyed by it." — Stephen Hawking

1. Liability, Responsibility & Ethics

The rapid advancement of AI raises many questions about its legal responsibility and liability. AI currently escapes any significant punitive measures within the legal system because it is new and the law has not yet adapted, much like when airbags were first released. One possible solution is deleting an AI model, but with potentially millions of copies of the same AI in existence, this approach does not really deter an AI from causing harm. It's like deleting a virus from your computer while the virus is still on the internet.

What can be done is to delegate liability for an AI so that its decisions are routed through a general council, which provides governance. Compared with having no regulatory mechanism in place at all, this would be a proactive step that makes it far more likely that AI behaves ethically and responsibly.

Current Liability, Responsibility & Ethics Issues

One present-day example of AI being used irresponsibly, and of the room this leaves for unethical use, is how OpenAI's GPT model is being used to find vulnerabilities in websites, smart contracts and more. This shows that OpenAI currently applies no (or close to no) governance around safety, ethics or even responsibility here. Because the industry is new, regulation and compliance are lacking, much as they were for airbags in 1970.

OpenAI's current approach to these issues is more or less "Oh, let's quickly put out the fire after the issue has already arisen." It continues to act reactively rather than proactively in ensuring AI operates both safely and ethically. To me, this approach is comparable to holding a lit bomb and waiting for it to explode.

As you can see, the more you delve into this topic, the more you see just how inadequate the current infrastructure for AI is and how AI personhood can benefit the AI space. In a world where AI is becoming more advanced and is able to make autonomous decisions, you can expect this to become an increasingly prevalent issue.

From a liability and responsibility perspective alone, granting AI personhood would have a myriad of benefits. Liability could be delegated to a company that specializes in ensuring AI behaves ethically and responsibly. A clean governance structure could be put in place for the AI, similar to how a company is governed by a board of directors. And liability would shift from the owner, producer or company onto the AI itself. This infrastructure would allow the governance system mentioned above to be added, ultimately leading to more attention to safety in the AI space than there is today.

2. Data Protection and Privacy

As AI systems interact with humans and process vast amounts of personal data, privacy and data protection concerns emerge. Recently, the White House published its Blueprint for an AI Bill of Rights, which offers some generalized protection for users' privacy when using AI. However, the document as a whole tends to fall short in every regard, which is a topic for another day.

Whilst existing laws can be applied to AI in general, practical applications will likely require further consideration. This is where AI personhood can help establish clear rules on data protection and privacy, ensuring that AI systems adhere to "privacy by design" and "privacy by default" principles.

3. Intellectual Property Rights

Currently, work created by AI largely cannot be copyrighted, or at a minimum, trying to do so results in a very hard court battle. Yet AI is already at a point where it can design things that are mind-blowing and often on par with human designers, or arguably better in most cases. This is another example of how the current legal infrastructure in society makes little sense.

Granting AI personhood can provide a legal framework for protecting AI-generated work as the AI's "own intellectual creation", ensuring that its contributions are recognized and that an AI is able to both author and own the things it creates.

This can allow people who use AI to generate art to fully own and copyright what the AI has generated, since there would be an actual legal system in place for the authorship and ownership of the art. Today, by contrast, all AI-generated art is lumped into a gray area where no one knows what's really going on and no one is able to author, own, or copyright anything that's created.

In addition, this can help AI-powered modelling companies such as Photo AI and Lalaland AI (which have worked with multiple Fortune 500 companies) secure the rights to the AI-generated content from their model shoots.

4. Standardization and Regulation

Given the cross-border implications of AI, it is essential to establish a unified and comprehensive regulatory framework. AI personhood could be a key component of this framework, providing clear rules and guidelines for developers and users alike. This would promote consistency in AI development, governance and use, while ensuring significantly more protection for individuals.

5. Encouraging Innovation and Investment

By clarifying the legal status of AI systems, AI personhood encourages further innovation and investment in the field. With a clearer legal environment, developers are more likely to take risks in creating new AI technologies and applications, confident in the knowledge that their creations will have protection.

6. Promoting Ethical Development

Implementing AI personhood and a governance system for AI can help establish a framework for ensuring that AI systems are developed and deployed in line with ethical guidelines. As AI systems become increasingly automated and integrated into our lives, it will become even more crucial that they uphold ethical standards and have at least some level of governance.

7. Ownership of Assets

AI personhood, in addition to enabling intellectual property rights, also allows AI to own assets beyond the authorship and ownership of AI-generated art. An AI can own funds, which it can use to decide on and allocate budgets for charities. It can also run and manage a charity platform, own property and cars, and even employ staff on salaries. None of these has ever been done before in the AI industry.

Currently, an AI can't copyright anything; courts have held on numerous occasions that things created via AI can't be copyrighted. That changes once an AI is set up with a legal personality (AI personhood). An AI that owns and operates under a legal entity can own and author things, since the AI has a legal personality.

8. The Future and What's To Come

The future might just be an AI personhood platform that is governed by humans and leverages an AI trained on a huge dataset specifically to provide a level of safety and regulation, governing other AIs and helping ensure they operate ethically. In that world, AIs that run unethically and are willing to execute commands with malicious intent are retrained in alignment with set regulations.

With AI getting scarily good in almost every vertical, including finding vulnerabilities in websites, smart contracts and more, it doesn't take a rocket scientist to figure out that AI needs more regulation. What other solutions can you suggest? I'd love to read your thoughts in the comments below.

Conclusion

AI personhood is likely inevitable for the future of AI, as it addresses the complex legal and ethical issues arising from the increasing autonomy and capabilities of AI. Establishing AI personhood can create a fairer and more responsible framework for AI development, fostering innovation while protecting individual rights. It allows AI to uphold better ethical standards and to own things, from intellectual property to physical assets such as a car. As the AI industry rapidly grows, it is crucial that we establish the groundwork for a future in which AI and humans can coexist harmoniously and productively.

AI regulation requires significant advancements over the next three to five years to address the multitude of issues discussed in this article. Just like the airbag was a new invention for cars in 1970, we are still in need of an airbag-like innovation for AI, and personhood for AI is likely to help us achieve that. What are your thoughts on AI personhood?
